Yes, light is a wave, and then …

A wave is a phenomenon in which energy is transmitted without transport of matter. It’s like energy traveling through space. It is easier to understand if we first think of an example where matter does transport the energy. For example, to knock down a stack of cans in a bazaar, a person takes a ball of socks and flings it against the stack. That is, the person gives energy to the ball, the ball travels about five meters carrying this energy, because air consumes very little of it, and reaches the pile of cans, passing on some of this energy in the collision. As the equilibrium of the stack is precarious, it falls apart. That’s energy transmission with transport of matter. A stroller being pushed, a hammer hitting a nail, typing on a keyboard … all of these communicate energy from one physical body to another through direct contact in which one exerts a force on the other, or does work, as we say in physics.

Someone could also bring down the stack of cans by placing very powerful speakers the same five meters away; with a suitable sound, they might make the pile tremble and fall. In this case there would be energy transmission, evident since the stack fell, but without transport of matter. It was only the vibration from the speakers that made the air tremble. The air did not “go” from the speaker “to” the cans; it only served as a medium for this vibration, which moved from molecule to molecule until it reached the cans and made them vibrate.

A wave is a vibration of a physical parameter of a medium, which can be air, a membrane, a solid material or a string. In such cases, the so-called mechanical waves, the parameter is a certain elasticity between the molecules of the medium. The molecules act as a network of micro springs pushing one another, and that is how the wave moves through the material.

Well, that is the mechanical wave. The wave nature of light is less intuitive, because the vibration occurs in the values of the electric and magnetic fields, and the medium is any transparent material or even a vacuum. As with sound, we have layers that spread out from the source, with oscillating values of the fields we call electromagnetic. For sound, what oscillates is the compression of the molecules; for light, the electromagnetic fields vary in intensity, and this disturbance moves through any medium that is transparent to it.

(Prism image above is from Wikipedia – Author D-Kuro)

Intensity and frequency

Like any wave, light may be described by two quantities: intensity and frequency, or intensity and wavelength. Frequency and wavelength carry the same information, because for a wave traveling at a given speed one determines the other. Intensity is a generic term I am using to mean how strong the vibration is. Intensity is connected to energy and to wave amplitude. Wavelength is the distance between two consecutive crests. Frequency is how many times per second the physical quantity in question oscillates. The unit for vibrations per second is the hertz (Hz). We could count frequency in vibrations per minute or per hour, but seconds are generally suitable and give more modest values. Wavelengths are generally measured in meters.
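The link between the two descriptions is the speed of the wave: speed = wavelength × frequency. Here is a minimal sketch in Python, assuming light in a vacuum and sound in air at roughly 343 m/s (an approximate room-temperature value). The function names are only illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, in a vacuum
SPEED_OF_SOUND = 343.0          # m/s, approximate value for air at about 20 °C (assumption)

def to_frequency(wavelength_m, speed_m_s):
    """Frequency in hertz from a wavelength in meters: f = speed / wavelength."""
    return speed_m_s / wavelength_m

def to_wavelength(frequency_hz, speed_m_s):
    """Wavelength in meters from a frequency in hertz: wavelength = speed / f."""
    return speed_m_s / frequency_hz

green_light_hz = to_frequency(550e-9, SPEED_OF_LIGHT)  # 550 nm, mid visible range
note_a_m = to_wavelength(440.0, SPEED_OF_SOUND)        # the note A at 440 Hz

print(f"550 nm light: {green_light_hz:.3e} Hz")  # about 5.451e+14 Hz
print(f"440 Hz sound: {note_a_m:.2f} m")         # about 0.78 m
```

The numbers show why wavelength is the handier label for light and frequency for sound: visible light has frequencies in the hundreds of trillions of hertz, while audible sound has modest frequencies and wavelengths from centimeters to meters.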

Light can then be measured and characterized by intensity and wavelength (wavelength is used more often than frequency). The same goes for sound (for which frequency is generally preferred).

When sound changes frequency, it goes from bass to treble or the other way around. The bass guitar is in the lower frequency range and the violin in the higher one. This perception is tied to the frequency of sound waves. When sound changes in intensity, it can range from weak to strong, from almost inaudible to deafening, or the other way around. In the case of light, our wave detector is quite different from our sound detector, and that brings up a different story: the perception of colors.

Note that these are measurements, just as weight (mass) is measured in kilograms, distance in meters and time in seconds, minutes, etc. But for sound and light we do not use the numerical values of frequencies with the same ease with which we use numerical values for weights, times and distances. For sounds we talk about pitch, low or high, and for light we perceive colors. But all of them can be translated into values on a numerical scale if we want, and that is what we need when we talk about photography or any other technology dealing with colors.

Although the two share many similarities, there is a fundamental difference in the way our senses process sound and light. That’s what I find most difficult to understand: why does the light spectrum bring this idea of regions while sound doesn’t?

Let’s use a picture to think about this difference. Above we have a colorful piano keyboard on which the two spectra are represented from low to high frequencies, from left to right. If we play the keys from left to right, the sound changes from low tones to high ones, but uniformly, progressively and continuously. When we look at the colors, also moving from left to right, we see groups forming regions on the keyboard, color regions. In both cases we are simply going from lower to higher frequencies. The blue keys produce sounds that are close in pitch, but those sounds do not form a family of a different nature, the way the blue keys form a group that stands out from the other parts of the keyboard/spectrum. The point to understand is: why does our brain perceive bands of light frequencies/wavelengths as alike, as grouped, when all we have is a continuous scale of wavelengths?

How we perceive sound, light and colors

The answer lies in the nature of the organs that receive these two types of waves: ears and eyes, the detectors of sound and light respectively. “Detecting” a wave always has to do with something that vibrates or changes when hit by that wave. We say that this something is sensitive to the wave. When such sensitization occurs, the data is converted into electrical impulses and sent to the brain, where the physical or chemical change that happened in the receiver is interpreted. In the case of the ears it is the vibration of the eardrum; in the case of light it is chemical reactions triggered by light, which occur in the retina.

The eardrum vibrates with sound waves, but not with all of them. There is a minimum intensity needed to set it vibrating, and there is a maximum intensity beyond which it starts to hurt and damage may even occur. These lower and upper intensity limits vary with the frequency of the sound. We can’t hear all frequencies either. In general we start hearing at about 20 Hz and can go up to about 20,000 Hz. Outside that frequency range we are effectively deaf. Close to the boundaries of this band we hear poorly, and in the middle of it we have the best response. That is, our ears have a perceptual response curve. If we are subjected to sounds of the same intensity but varying frequencies, we barely hear those close to the edges of the audible spectrum and hear very well in its central portion, between 2,000 and 5,000 Hz.

That means that for sound we have one (1) type of detector, sensitive to a range of frequencies and intensities that we call the sound spectrum. It is a single channel for bass, midrange and treble. A single “pair of numbers”, reporting frequency and volume, is sent to the brain. Each of these numbers ranges between a minimum and a maximum, and this gradation is perceived and understood by the brain.

Here comes the difference in the case of light, the one responsible for colors: for light waves we have three (3) different channels that send information to the brain. But they do not “vibrate together” with the frequency of the light they receive. In our retina we have cells for low light, that is, for night vision, called rods, and cells for daylight conditions, called cones. The latter are the ones that allow us to interpret ranges of wavelengths in terms of colors. But cone cells only measure intensities and do not distinguish between wavelengths. While for each key you press on our colorful piano a single type of audible signal is captured by your ears, when you look at the same key, that portion of the image projected on your retina sends 3 different signals.

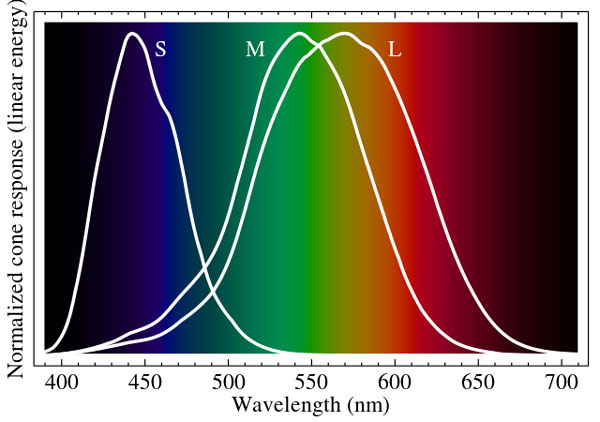

Cone cells come in three different types. A first group responds well to the waves called S (Short), another group to the M (Medium) waves, and the last to the L (Long) ones. The S, M and L cones are like 3 sentinels placed at strategic points in the visible spectrum. They do not say which wavelength is coming; they just say “something is coming, with such and such intensity.”

The chart above (source Wikipedia) shows the light spectrum ranging from 400 to 700 nm in wavelength (a nanometer is 10⁻⁹ meters). The bell-shaped curves represent the sensitivity of each type of cone. The Short cone, when it receives light below 400 nm, does not see anything: its response goes to zero and it transmits nothing. It is not stimulated by electromagnetic waves with wavelengths shorter than 400 nm. From that point on, it shows more and more sensitivity, and it reacts best around 420-440 nm. At these wavelengths the cone is most efficient at transforming light into signals that go to the brain. But it does not distinguish anything within its range. It is only more or less sensitive depending on the wavelength, and it sends a signal that is the product of the intensity of the wave itself and the cone’s sensitivity to that wavelength. The same reasoning applies to the Medium cones, which respond best around 534-555 nm, and to the Long cones, which perform best at 564-580 nm and are blind from 700 nm on.
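The "intensity times sensitivity" rule can be written down in a few lines. This is only a sketch: the peak positions are picked from the ranges quoted above, while the Gaussian shape and the widths of the curves are assumptions made for illustration. Real cone curves are asymmetric and overlap differently.

```python
import math

# Peak wavelengths (nm) picked from the ranges quoted in the text; the Gaussian
# shape and the widths are assumptions made only for illustration.
CONES = {"S": (430.0, 25.0), "M": (540.0, 40.0), "L": (570.0, 45.0)}

def sensitivity(cone, wavelength_nm):
    """Relative sensitivity (between 0 and 1) of one cone type at a wavelength."""
    peak, width = CONES[cone]
    return math.exp(-0.5 * ((wavelength_nm - peak) / width) ** 2)

def cone_signal(cone, wavelength_nm, intensity):
    """Signal sent by a cone: light intensity times its sensitivity there."""
    return intensity * sensitivity(cone, wavelength_nm)

# The same unit intensity produces very different signals along the spectrum.
for wavelength in (430, 500, 540, 570, 650):
    signals = {c: round(cone_signal(c, wavelength, 1.0), 3) for c in CONES}
    print(wavelength, "nm ->", signals)
```

Note that each signal is a single number. Nothing in it records the wavelength that produced it.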

Again, they do not say “Hey, I am receiving at 500 nm, now I am receiving at 550 nm”, because the effect caused by light is not to make anything vibrate along with it, as happens with sound. The effect is a chemical reaction, so the signal is only a kind of yes or no and, if yes, how much.

So let’s say our 3 sentinels S, M and L are placed at 430, 540 and 570 nm, respectively, in the range of light wavelengths. From these three sentinels the brain receives only warnings such as “receiving a lot” or “receiving just a little”. None of them, alone, says “which” wave is coming. This is very different from the situation in which the eardrum does report “which”, in the sense of what frequency of sound wave is coming. In the case of light, this is an inference that the brain makes by comparing the information received from each of the three sentinels.

In short, the sound sensor is a single one, but it monitors and reports the frequency of the wave it is receiving. Light sensors, individually, do not distinguish frequencies and only send intensity information, but since there are 3 of them, each placed on a different part of the spectrum, the brain can differentiate the frequencies from the combination of these three intensity values.
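To see how a comparison of three intensities can stand in for a frequency reading, here is a toy sketch for light of a single wavelength. The Gaussian curves, their widths and the matching procedure are assumptions for illustration. This is not a description of what the brain actually computes.

```python
import math

# Assumed Gaussian sensitivity curves: (peak nm, width nm) for S, M and L.
CONES = {"S": (430.0, 25.0), "M": (540.0, 40.0), "L": (570.0, 45.0)}

def responses(wavelength_nm, intensity):
    """S, M and L signals for light of a single wavelength."""
    return tuple(
        intensity * math.exp(-0.5 * ((wavelength_nm - peak) / width) ** 2)
        for peak, width in CONES.values()
    )

def proportions(signals):
    """Share of each channel in the total; intensity cancels out here."""
    total = sum(signals)
    return tuple(s / total for s in signals)

def guess_wavelength(signals):
    """Wavelength (400-700 nm) whose channel proportions best match the signals."""
    target = proportions(signals)

    def mismatch(wavelength_nm):
        candidate = proportions(responses(wavelength_nm, 1.0))
        return sum((a - b) ** 2 for a, b in zip(candidate, target))

    return min(range(400, 701), key=mismatch)

print(guess_wavelength(responses(500, 0.2)))   # dim light at 500 nm
print(guess_wavelength(responses(500, 50.0)))  # bright light at 500 nm
print(guess_wavelength(responses(620, 3.0)))
```

The dim and the bright 500 nm lights give very different raw signals, yet both are placed at 500 nm, because only the proportions between the channels are compared.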

Colors are more absolute

The consequence of this particular way of assessing wavelength, through 3 different channels in a limited range of the spectrum, is that the absolute positioning of the wave within the spectrum is much more accurate. Everything in our perception works better for contrasts and for detecting ranges than for absolute values. For example, it is easier to tell whether an interval between musical notes is one tone, two tones or one octave than to say which specific musical note a sound is. With colors, thanks to this system of 3 different cones, the brain can quickly locate what is reaching the retina on a model of wavelengths. It creates the groups or ranges we call colors and is considerably certain about them. When only the S channel is signaling, we say that we have violet or blue. When only the L channel is signaling, we say red. The M channel sends signals for nearly the entire spectrum, and where it is at its peak, L is also in a highly sensitive region. But M is roughly responsible for what we call green.

A consequence of this is that if I play a note on a flute for you today and again tomorrow, you’ll hardly be able to tell whether it was the same note. But if I show you a red fabric today and another fabric tomorrow, you’ll be able to tell quite accurately whether the color is the same or not. It is in this sense that the perception of color is more absolute than that of sound. Another example: it is possible to play a song in a higher or lower key while preserving a great deal of the original musical impression. Singers shift the notes to fit melodies into their vocal range. But if we displace the entire color spectrum of a photograph, while keeping the relative values in wavelengths, that will hardly go unnoticed and may even conflict with our perception of the scene, since familiar objects would appear in the wrong colors.
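The musical side of this comparison can be checked numerically. The sketch below assumes twelve-tone equal temperament, where shifting by one semitone multiplies every frequency by 2^(1/12). The note frequencies are approximate and the function names are illustrative.

```python
def transpose(frequencies_hz, semitones):
    """Shift every note by the same number of equal-tempered semitones."""
    factor = 2 ** (semitones / 12)
    return [f * factor for f in frequencies_hz]

def intervals(frequencies_hz):
    """Ratios between consecutive notes, which is what carries the melody."""
    return [b / a for a, b in zip(frequencies_hz, frequencies_hz[1:])]

melody = [261.63, 329.63, 392.00, 523.25]  # approximately C4, E4, G4, C5
higher = transpose(melody, 3)              # the same melody three semitones up

print([round(f, 2) for f in higher])
print([round(r, 4) for r in intervals(melody)])
print([round(r, 4) for r in intervals(higher)])  # same ratios as the original
```

Every absolute frequency changes, but the ratios between notes do not, and those ratios are what our hearing mostly holds on to.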

The wave itself is only vibration at different frequencies. It has no “color”; colors are a construct of our brain. If we had only one type of cone, M for example, the only thing we could say about the different parts of a scene or image would be whether they are “light or dark”. As we have 3 channels, we say that our vision is tri-chromatic, and we manage to create distinct regions and evaluate different wavelengths both as groups and individually. Some animals, such as pigeons, are thought to have penta-chromatic vision, which would give them greater accuracy in positioning wavelengths absolutely. It is thought that this feature is important for animals because it allows them to distinguish prey, predators and food by their colors. For an animal with monochromatic vision it would be difficult to tell whether a fruit is ripe or not. Color remains visible and distinguishable over a wide range of light and darkness conditions: from noon to five pm, from shade to sun. We are able to recognize a green even in quite varied lighting conditions. Monochromatic vision never knows whether it is looking at a dimly lit green or a well-lit blue, because the two may translate into the same intensity. A chicken would probably starve if it had to evaluate each grain of corn by its shape alone.
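The confusion between a dimly lit green and a well-lit blue can be reproduced with a single channel. This is a sketch under assumed Gaussian sensitivity curves; the wavelengths chosen for "green" and "blue" are illustrative.

```python
import math

# Assumed Gaussian sensitivity curves: (peak nm, width nm) for S, M and L.
CONES = {"S": (430.0, 25.0), "M": (540.0, 40.0), "L": (570.0, 45.0)}

def signal(cone, wavelength_nm, intensity):
    """Cone signal: intensity times the cone's sensitivity at that wavelength."""
    peak, width = CONES[cone]
    return intensity * math.exp(-0.5 * ((wavelength_nm - peak) / width) ** 2)

green_nm, blue_nm = 540, 470
dim_green = 1.0
# Pick the blue intensity so the M cone responds exactly as it does to the dim green.
bright_blue = dim_green * signal("M", green_nm, 1.0) / signal("M", blue_nm, 1.0)

m_green = signal("M", green_nm, dim_green)
m_blue = signal("M", blue_nm, bright_blue)
print(m_green, m_blue)  # an eye with only M cones cannot tell the two apart

# With three channels the two lights give clearly different triads.
for cone in CONES:
    print(cone, round(signal(cone, green_nm, dim_green), 3),
          round(signal(cone, blue_nm, bright_blue), 3))
```

With only the M channel the two lights are indistinguishable. Once S and L are added, the blue light shows a strong S signal that the green one lacks.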

Physical and Human colors

So far we have reasoned as if the light we receive in our eyes had a single wavelength. This is only a theoretical condition that helps keep things simple at the beginning. Likewise, musical instruments produce sounds with better-defined frequencies, and that helps when talking about sound frequencies, but most of what we hear is actually a composite of many frequencies, not always musical ones.

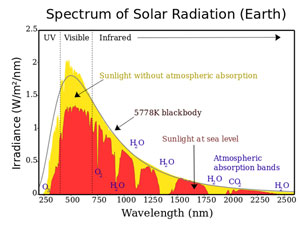

In both cases, light and sound, what we actually receive is always a mixture or composition of many wavelengths, with varying intensities as well. Light that has only one wavelength is what we call spectral light. It would be the light of a rainbow, or what we obtain when a prism decomposes white light. The light from a common object, made of a certain material and receiving and reflecting a certain light, which is projected on our retina when we form its image, is a compound of many wavelengths, each with its own intensity.

The graph on the left (source Wikipedia) shows the distribution of sunlight irradiance versus wavelength, ranging from UV to infrared, with the visible spectrum in the middle. Yellow is what comes out of the Sun and red is what reaches the Earth’s surface after being filtered by the atmosphere. We see that the entire visible spectrum is present and that the peak is at around 500 nm. This is the light that we call standard white light.

The light that an object reflects or emits is, in terms of color, what we call its “physical color”. It would be represented by a histogram indicating the presence and relative intensity of every wavelength in that light. In other words, it is a point in a space with, in principle, infinitely many dimensions, one intensity for each wavelength. This is the “physical color”. It is what the object in fact emits, no matter what we do with it once that light enters through our pupil.

Here comes a fact that is fundamental to understanding color representation systems such as digital cameras, film, printers and everything else that deals with colors: no matter how complex and rich the light that enters our eyes may be, it will be translated and transformed into only three values by the S, M and L cones. That means that two objects emitting light with different distributions may well result in the same triad of S, M and L, and we will say that the two objects have the same color. The color we attribute to them is called the “human color”, which is nothing more than a translation of a “physical color” (a distribution of spectral colors) into 3 values that tell all that our visual system really wants to know about it.
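Two different "physical colors" that collapse to the same triad (such pairs are usually called metamers) can be built numerically. The sketch below assumes Gaussian sensitivity curves and three arbitrarily chosen single-wavelength lights at 450, 540 and 610 nm. It solves a 3×3 linear system with Cramer's rule to find how strong each of them must be to imitate a flat, 31-wavelength spectrum.

```python
import math

# Assumed Gaussian sensitivity curves: (peak nm, width nm) for S, M and L.
CONES = {"S": (430.0, 25.0), "M": (540.0, 40.0), "L": (570.0, 45.0)}

def cone_triad(spectrum):
    """Reduce a spectrum {wavelength_nm: intensity} to its three values S, M, L."""
    return [
        sum(i * math.exp(-0.5 * ((w - peak) / width) ** 2) for w, i in spectrum.items())
        for peak, width in CONES.values()
    ]

def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def match_with_primaries(target_triad, primaries_nm):
    """Intensities of three single-wavelength lights that reproduce the triad."""
    columns = [cone_triad({w: 1.0}) for w in primaries_nm]
    # Row i = cone type, column j = response of that cone to primary j.
    matrix = [[columns[j][i] for j in range(3)] for i in range(3)]
    d = det3(matrix)
    weights = []
    for j in range(3):  # Cramer's rule: swap column j for the target triad
        replaced = [row[:] for row in matrix]
        for i in range(3):
            replaced[i][j] = target_triad[i]
        weights.append(det3(replaced) / d)
    return dict(zip(primaries_nm, weights))

rich = {w: 1.0 for w in range(400, 701, 10)}  # 31 wavelengths, all equally strong
poor = match_with_primaries(cone_triad(rich), (450, 540, 610))

print(cone_triad(rich))
print(cone_triad(poor))  # same triad, up to rounding, from only three wavelengths
print(poor)
```

In this toy model the two spectra are physically very different, yet they are the same "human color" because S, M and L come out equal.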

Because of this, in order to print a landscape of a sunset, we don’t need (and that is the industry’s salvation) to make the paper emit the same distribution of spectral colors that someone would have received by being there, looking at the sunset, at the time of shooting. The printed image will show a violent compression of the luminosity range while preserving, with reasonable accuracy for our perception, the colors translated into the tri-chromatic human values, which are then read by the S, M and L cones and correspond to what someone would see at the actual sunset. That is, a particular shade of orange in the sky, in the actual scene, contained millions of light frequencies at a million different levels of intensity when crossing the pupil of an observer. When they arrived at the retina, they were translated into only three values, S, M and L. As a consequence, for the observer later looking at the printed picture, a much poorer frequency range will suffice, based only on cyan, magenta, yellow and black pigments and the white of the paper, with a luminosity varying by a factor of 100 at most. As long as this arrangement produces the same 3 values S, M and L, the photo viewer will immediately recognize the scene they had seen and rate the color reproduction as true to life.

The fact that our perception of a distribution of light wavelengths is made by three monochromatic sensors, each in a different region of the visible spectrum, means that very simple wavelength distributions may cause the same effect as very complex ones. In fact, we see the same color for very different lights entering our eyes. This is key to any system you can imagine for reproducing color images with some resemblance to what we would perceive looking at the scene that generated them. Isn’t it a remarkable fact that only 4 dyes or pigments can reproduce on paper, with a faithful likeness, images of various subjects in various light conditions? It is the understanding of the tremendous simplification performed in the first place by our own light-catching devices that made it possible.
