Systems for producing color images always work from a group of so-called primary colors or components. Other colors are obtained by mixing these primaries. In order to mix them, one must be able to vary the amount with which each one enters the composition of a final color.

The primary color group varies greatly and depends first on the physical possibilities of the medium that will display the colors. White paper, on which you want to show a photograph, is a completely different situation from a cell phone screen on which you want to show the same image.

Graphic representation of how a printer makes a light color by dithering the paper with small dots of a primary color, in this case magenta.

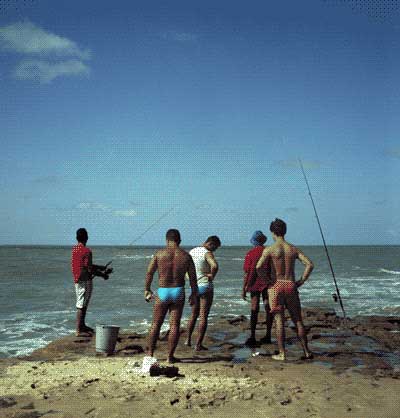

But in all situations there is one thing in common: there is a minimum and a maximum quantity with which a certain primary color can be included in the recipe of a “compound” color. If it is paper printed with an ink-jet, the minimum is obtained with the smallest droplets that the printer head can throw on the paper at the lowest possible density, provided that, to our eyes, the result still looks like a continuous color, uniform and not formed by small dots. The maximum is reached when the printer head throws so much ink that it covers the paper completely. The limit is set by the need for the ink to dry and to keep it from flowing onto printer parts.

An LED display, such as a cell phone or tablet screen, follows a similar principle. The minimum is when the corresponding LED is lit at the lowest intensity the hardware allows. The maximum comes when it is lit up and emitting the most light energy in that color that the hardware can deliver. That is the maximum brightness.

Digital images recorded as files must be able to address those maximums and minimums for each primary color. For each pixel there is a recipe with the quantity of each color varying between these limits. If you are not sure what a pixel is, read this article before you continue. When the file is read so that the image can be displayed by a printer or a computer screen, the controller of the device produces the maximum and the minimum it is capable of and, proportionally, the intermediate values. The file is just a recipe. The physical color we see is made by the hardware/software interpretation of a given recipe.
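
To make the "recipe" idea concrete, here is a minimal sketch in Python. It assumes a purely linear mapping between the stored value and the device output, which is a simplification (real devices usually apply further corrections). The function name `device_output` and the two sets of device limits are made up for illustration.

```python
def device_output(recipe_value, recipe_max, device_min, device_max):
    """Map a stored value (0..recipe_max) linearly onto a device's own range.

    Assumption: the device responds proportionally. The units of
    device_min and device_max are whatever the hardware uses.
    """
    fraction = recipe_value / recipe_max
    return device_min + fraction * (device_max - device_min)


# The same recipe value sent to two hypothetical devices with different limits.
recipe = 128  # roughly halfway on a 0..255 scale
print(device_output(recipe, 255, device_min=0.5, device_max=400.0))  # a screen
print(device_output(recipe, 255, device_min=0.0, device_max=1.0))    # a printer
```

The stored number is identical in both calls. Only the physical result differs, because each device stretches the recipe over its own minimum and maximum.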

The depth of color appears precisely when one asks how many intermediate values the file should keep between the maximum and the minimum. The question makes sense because a digital file only holds integers (zeros and ones, to be more exact). So you need to decide how many shades of red, for example, the file will be able to hold. If one says that for each color of the RGB (red, green, blue) system we will have 10 different tones going from minimum to maximum (which will actually depend on the system that generates the colors), there will be no tone between red 2 and red 3. It will always be either 2 or 3. There will be a “jump” from one color to the next in a ladder of intensities.
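
The ladder can be sketched in a few lines of Python. This assumes the "true" intensity is a fraction between 0.0 and 1.0 and that the steps are numbered from 0. Both choices, and the name `quantize`, are illustrative and not taken from any particular file format.

```python
def quantize(intensity, levels):
    """Snap a continuous intensity in [0.0, 1.0] to the nearest integer step.

    With `levels` steps the result is an integer from 0 to levels - 1.
    """
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0.0 and 1.0")
    return round(intensity * (levels - 1))


# Two slightly different reds land on neighboring steps;
# nothing can be stored in between.
print(quantize(0.25, 10))
print(quantize(0.30, 10))
```

Any intensity between those two inputs ends up on one of the two steps, which is the "jump" described above.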

Now the question of bits. Why is the color depth expressed in number of bits? The point is that digital files are made of a huge sequence of bits, ones and zeros. Imagine that the grades of a primary color were stored in little bottles on a large shelf, and that you needed to label these bottles to tell the colors apart using only zeros and ones. If you use 2 digits you can create only 4 different labels: (0,0), (0,1), (1,0), (1,1). If you add a third digit you double that number, because you can write the same 4 pairs once starting with 0 and again starting with 1. You now have 8 labels. For the same reason, each digit you add doubles the number of labels, and your color palette grows exponentially (in powers of 2).
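
The bottle labels can be listed with a short Python sketch. The function name `labels` is just for illustration.

```python
from itertools import product


def labels(n_bits):
    """Return every distinct label that can be written with n_bits zeros and ones."""
    return ["".join(bits) for bits in product("01", repeat=n_bits)]


# Each extra digit doubles the number of labels.
for n in range(1, 5):
    print(n, len(labels(n)), labels(n))
```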

An 8-bit-per-channel RGB file, with a total color depth of 24 bits (3×8), is a file that can store 2^8 = 256 different gradations for each of the primary colors. It’s like having 3 shelves of colored bottles, each with 256 variations from the weakest to the strongest. But note again that this is just information. Whether the system that physically reproduces these colors is capable of yielding so many variations within its own limits of intensity is another story.

The compound colors can go up to 256 x 256 x 256, a total of 16,777,216 different colors. Each pixel in that file uses 8 + 8 + 8 = 24 bits. Because a byte is a set of 8 bits, the file spends 3 bytes per pixel if it is not compressed (and uncompressed files are rare).
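
The arithmetic above can be checked with a small Python sketch. The helper names `pack_rgb` and `uncompressed_size` are made up for illustration, and the size ignores any header a real file format would add.

```python
def pack_rgb(r, g, b):
    """Store one pixel as 3 bytes, one per channel (each value 0..255)."""
    return bytes([r, g, b])  # raises ValueError if a value does not fit in 8 bits


def uncompressed_size(width, height, bytes_per_pixel=3):
    """Bytes needed for the raw pixel data alone (no header, no compression)."""
    return width * height * bytes_per_pixel


levels_per_channel = 2 ** 8
total_colors = levels_per_channel ** 3

print(levels_per_channel, total_colors)
print(len(pack_rgb(255, 0, 255)), "bytes per pixel")
print(uncompressed_size(480, 480), "bytes for a 480 x 480 image")
```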

With this amount of color our eyes and brain can no longer distinguish two neighboring tones in any direction, either by darkening or brightening one, two or three colors of the RGB pattern. A minimal variation, one step, between one color and the next, is not perceived by our vision.

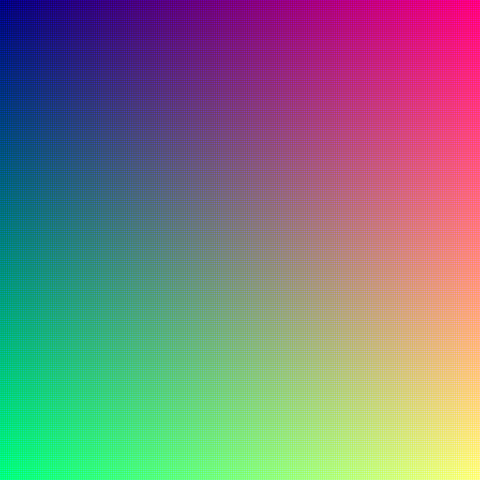

Above is a figure that theoretically should give a sample of what 16 million colors means. I say theoretically because, as this figure has 480 x 480 pixels, it can only juxtapose 480 x 480 = 230,400 pixels, each one with a different color. On top of that, your screen driver may have decided it would be a good idea to shorten this range even more and save memory. It bets you won’t notice the trick, and it does not know that our subject is exactly about showing the maximum number of colors. Maybe you are even seeing a grid pattern, but that is again because systems are designed as a compromise between saving memory and faithfully representing image files.
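
A quick Python calculation shows how small a share of the 24-bit palette a figure of this size can show at best, assuming every pixel really carries a different color.

```python
width, height = 480, 480
pixels = width * height          # at most one distinct color per pixel
total_colors = 256 ** 3          # all 24-bit RGB combinations

share = pixels / total_colors
print(pixels, "pixels out of", total_colors, "possible colors")
print(f"at most {share:.2%} of the palette")
```

Even in the best case, the figure covers only a little over one percent of the colors a 24-bit file can describe.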

A 24-bit image is therefore already considered true color because it reproduces more colors than we can distinguish. Its digital nature, which varies in steps and not along a continuous slope, can no longer be perceived because the steps have become negligibly small.

It is important to stress again that what the file stores is one thing and what the hardware/software pair shows is another. Manufacturers and developers process the information contained in image files according to their limitations and their opinions as to what is possible and sufficient to render an image file well.

Even the true color standard of 24 bits and 16 million colors is almost always interpreted and modified according to tastes and technologies, both when reading and when recording. For instance, our eyes are less sensitive to variations in the blue range than in red or green, so some systems take some bits from one range and give them to another. This reduces the number of possible blue shades and increases it for the other colors. The goal is to render the image more realistically. There is also a fourth channel, alpha, that defines how transparent a pixel is when there is something “underneath” it, and that channel consumes a few more bits.
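
As an illustration of uneven bit budgets, here is a sketch of a 3-3-2 split, an 8-bit layout that gives red and green 3 bits each and blue only 2. It is one example layout chosen for this sketch, not the scheme of any particular system mentioned in this article. The second function assumes an alpha channel of 8 bits, which is also an assumption. Both function names are made up.

```python
def pack_rgb332(r, g, b):
    """Squeeze 8-bit channels into one byte: 3 bits red, 3 bits green, 2 bits blue."""
    return ((r >> 5) << 5) | ((g >> 5) << 2) | (b >> 6)


def pack_rgba8888(r, g, b, a):
    """Pack four 8-bit channels (alpha last) into a single 32-bit integer."""
    return (r << 24) | (g << 16) | (b << 8) | a


# Blue keeps only 4 shades, red and green keep 8 each.
print(2 ** 3, "red shades,", 2 ** 3, "green shades,", 2 ** 2, "blue shades")
print(format(pack_rgb332(255, 0, 0), "08b"))
print(format(pack_rgb332(0, 0, 255), "08b"))
print(hex(pack_rgba8888(255, 128, 0, 255)))
```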

The purpose of this quick summary was to introduce the basic concept of what depth of color is. However, colorimetry is a very wide field and much can be optimized to save memory and increase the speed of image transmission without losing the impression of truthfulness. Be sure that the developers of these technologies are very creative and can achieve true miracles.

Also, do not be fooled into thinking that with 24 bits we have reached true color and that this is the limit. In fact there are files with up to 48 bits. What is the point of keeping such subtle variations if they are not perceived by the human eye? On this you’d better read the article that talks about RAW files, as these are a good example of when more bits can help, and a lot.
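
To get a feeling for the numbers, this sketch compares 24-bit and 48-bit files. It assumes the 48 bits are split evenly into 16 bits for each of the three channels. The function name `color_counts` is illustrative.

```python
def color_counts(bits_per_channel, channels=3):
    """Return (gradations per channel, total combinations) for a given bit depth."""
    levels = 2 ** bits_per_channel
    return levels, levels ** channels


for bits in (8, 16):
    levels, total = color_counts(bits)
    print(f"{bits * 3}-bit file: {levels:,} gradations per channel, {total:,} colors")
```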
